Code is Cheap, Trust is Expensive

Many teams using AI ship more code. Not all of them are shipping better software.

Look at the metrics — cycle time, velocity, features delivered. If AI can generate most of the code, you might expect teams to be three or four times faster. Often, they’re not. The improvement is smaller than expected.

The tools aren’t the limiting factor. The system around them is.

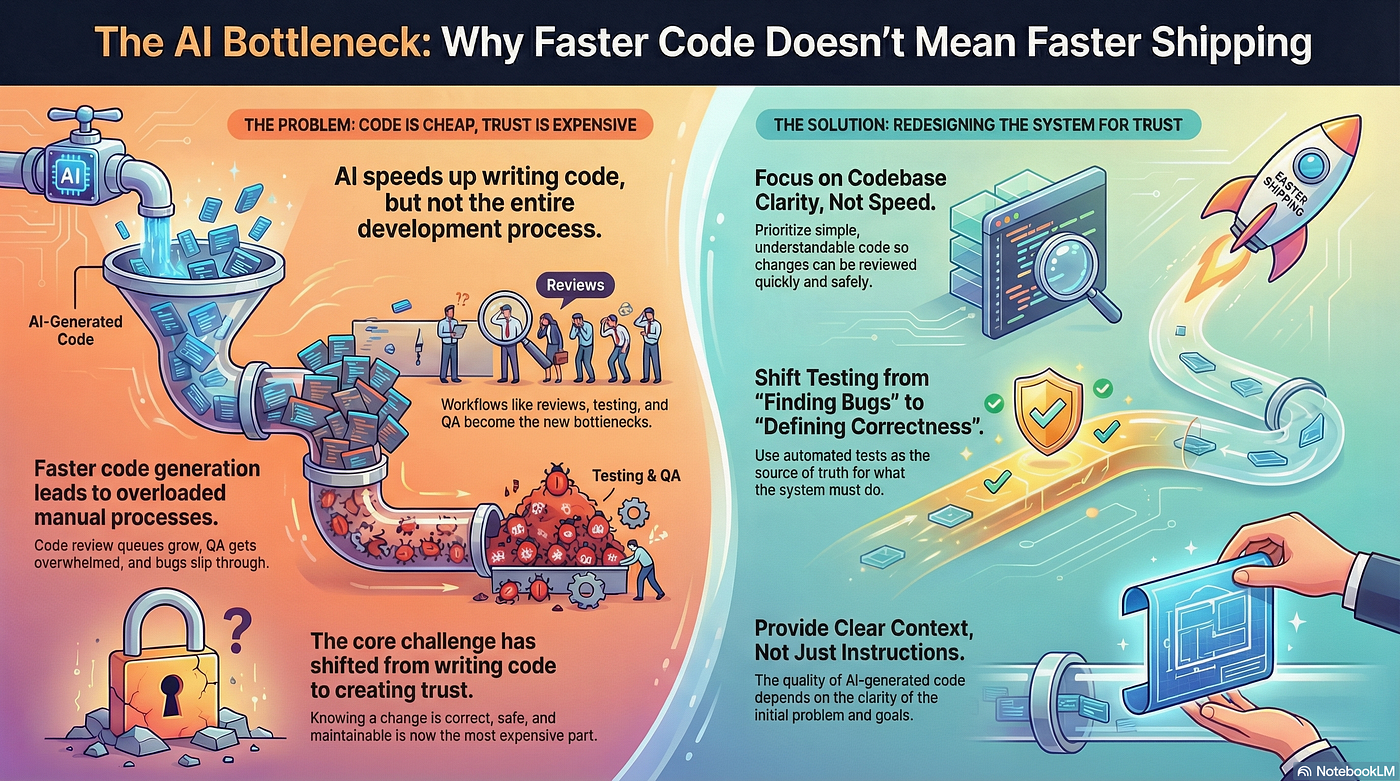

The Bottleneck Moved

AI accelerates one part of the process: writing code. But software development is more than writing code. It’s architecture, testing, reviews, QA, and release. These parts are interconnected. When only one part speeds up, bottlenecks shift to the parts that weren’t designed for this speed.
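
A minimal sketch of that arithmetic, assuming a delivery pipeline whose overall rate is capped by its slowest stage. The stage names and weekly capacities are hypothetical numbers chosen for illustration, not measurements from any real team.

```python
# Hypothetical capacities, in pull requests per week, for each stage.
# Illustrative numbers only.

def throughput(stages: dict[str, float]) -> tuple[str, float]:
    """Return the slowest stage and its rate; the pipeline cannot go faster."""
    name = min(stages, key=stages.get)
    return name, stages[name]

before = {"write": 10, "review": 12, "test": 15, "release": 20}
after = dict(before, write=40)  # assume AI makes writing four times faster

print(throughput(before))  # ('write', 10)
print(throughput(after))   # ('review', 12)
```

Under these assumptions, writing gets four times faster but delivery only goes from 10 to 12 PRs per week, because review is now the limit.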

On my team, which works on a system that evolved from a monolith to a monorepo over several years, we saw this firsthand. Engineers started generating more code. PR volume went up. But review capacity stayed the same. PRs queued up. Reviewers skimmed instead of reading. Bugs slipped through. The team felt faster, but cycle time didn’t improve. The bottleneck just moved.

The problem wasn’t AI. Everything around code generation — how we review, how we test, how we define what to build — was still designed for a world where writing code was the slow part.

Trust Became the Expensive Part

Before AI, writing code was the slow and expensive part. Most processes were built to protect that cost — long reviews, heavy planning upfront, manual testing at the end.

That assumption is broken. AI generates working code quickly. Creating code is no longer the constraint.

What became expensive is everything after: understanding what changed, knowing if the change is correct, knowing if it’s safe to deploy. Teams still own the consequences. Bugs in production. Security issues. Performance regressions. AI generates the code and moves on. The humans carry the risk.

More activity doesn’t mean more progress.

Structure Is a Safety Mechanism

Structure is not about elegance. In the AI era, structure is a safety mechanism.

A codebase must help humans answer one question quickly: “What does this change affect?” If that answer is unclear, trust breaks down.

The practice that has worked best for me is vertical slices with strict dependency rules. Each module owns its domain, exposes a clear public API, and forbids direct imports of another module’s internals. Domain code never depends on infrastructure. When a change in one module can’t silently break another, reviewers can focus on intent instead of tracing side effects.
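
One way to enforce a rule like this is an automated import check. The sketch below is an assumption about how that could look, not a description of any specific tool: it supposes each top-level package is a module whose public API lives in a hypothetical `<module>.api` submodule, and it flags imports that reach past that API. The names `boundary_violations`, `orders`, and `billing` are made up for the example.

```python
import ast

# Assumed convention: a module's public surface is `<module>.api`.
PUBLIC_NAME = "api"

def boundary_violations(owner: str, source: str, modules: set[str]) -> list[str]:
    """List imports in `source` (a file owned by `owner`) that touch another
    known module anywhere except through its public `api` submodule."""
    violations = []
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Import):
            targets = [alias.name for alias in node.names]
        elif isinstance(node, ast.ImportFrom) and node.level == 0 and node.module:
            # Join module and imported name so `from billing import api` is allowed.
            targets = [f"{node.module}.{alias.name}" for alias in node.names]
        else:
            continue  # relative imports stay inside the owning package
        for target in targets:
            parts = target.split(".")
            foreign = parts[0] in modules and parts[0] != owner
            if foreign and parts[1:2] != [PUBLIC_NAME]:
                violations.append(target)
    return violations

orders_source = """
from billing.api import create_invoice
from billing.internal.ledger import post_entry
import orders.models
"""
print(boundary_violations("orders", orders_source, {"orders", "billing"}))
# ['billing.internal.ledger.post_entry']
```

Run in CI, a check of this kind turns the dependency rule from a convention reviewers must remember into a failure they can see.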

Without conventions, every AI generation drifts. The same problem gets solved three different ways across three files. With conventions defined in rules files and project instructions, the AI follows your design language instead of guessing. Consistent patterns make the system easier to trust, test, and review.

Tests Define Correctness

When code becomes cheap, testing becomes more important — not less.

AI makes it easy to introduce changes that look correct and still break important behavior. Tests shift from catching bugs to defining what it means for the system to be correct. They become the contract between past and future changes. When AI generates code, tests are what allow a reviewer to say “this is safe” without reading every line.
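
As an illustration, here is a small contract-style test suite. The function `order_total` and its pricing rules are hypothetical, invented for this sketch; the point is that each test names a behavior that must survive any rewrite, whoever or whatever writes it.

```python
import unittest
from decimal import Decimal

def order_total(subtotal: Decimal, discount_pct: Decimal) -> Decimal:
    """Hypothetical rule: apply a percentage discount, never go below zero,
    and round the result to cents."""
    discounted = subtotal * (Decimal(100) - discount_pct) / Decimal(100)
    return max(discounted, Decimal(0)).quantize(Decimal("0.01"))

class OrderTotalContract(unittest.TestCase):
    def test_discount_is_applied(self):
        self.assertEqual(order_total(Decimal("80.00"), Decimal(25)), Decimal("60.00"))

    def test_total_never_negative(self):
        self.assertEqual(order_total(Decimal("10.00"), Decimal(150)), Decimal("0.00"))

    def test_result_is_rounded_to_cents(self):
        self.assertEqual(order_total(Decimal("9.99"), Decimal(10)), Decimal("8.99"))
```

Saved in a test file, this runs with `python -m unittest`. If a generated change reimplements `order_total` and these tests still pass, the reviewer can spend attention on intent rather than on re-deriving the arithmetic.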

Quality must move earlier in the process. If validation happens late, with manual QA at the end and reviews days after the PR opens, the system chokes. QA shifts from clicking through pages to find bugs to defining what should be tested: identifying risk areas and designing test scenarios. AI generates the test code and executes the checks. The hours are spent differently, on designing rules instead of executing manual checks.

Context Replaces Instructions

AI didn’t just make code generation faster. It made unclear thinking more expensive.

The most important input to the system is no longer tasks, tickets, or step-by-step instructions. It’s context — why this exists, what problem it solves, what constraints matter, and what success looks like. Without context, AI produces code that’s technically correct but conceptually wrong.

This changes how product and engineering interact. From my experience leading architecture decisions across multiple teams, the best results come when engineers participate in definition — not just execution. Product brings the problem. Engineering brings the constraints. The collaboration happens before the AI writes a single line.

Keep documentation in the repository, next to the code: project instructions, rules files, architecture decision records. AI can read them. New team members can read them. The context is alive, not buried in a wiki nobody checks.

Ownership Must Be Explicit

When AI increases individual leverage, one engineer covers more ground. This reduces some coordination costs. But it also concentrates responsibility.

If AI only generates code, smaller teams face a bottleneck: who reviews all this output? AI can assist review too, by checking code against rules, summarizing changes, and flagging risks. Humans decide what to focus on and whether the intent is correct. AI handles volume. Humans handle judgment.

Module ownership, clear boundaries, reduced change amplification — these are architecture decisions, but they’re also team decisions. Without clear ownership, faster execution creates confusion, not progress.
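
Explicit ownership can also be made machine-readable. The following is a rough sketch under assumed conventions: a hypothetical `OWNERS` map from top-level directory to team, and a helper that groups a change by owner so a cross-team change is visible before review starts. The team names and paths are invented for the example.

```python
from pathlib import PurePosixPath

# Hypothetical ownership map: top-level directory -> owning team.
OWNERS = {
    "billing": "team-payments",
    "orders": "team-checkout",
    "shared": "team-platform",
}

def owners_for_change(changed_paths: list[str]) -> dict[str, list[str]]:
    """Group changed files by owning team; unmapped paths go under 'UNOWNED'."""
    grouped: dict[str, list[str]] = {}
    for path in changed_paths:
        top = PurePosixPath(path).parts[0]
        grouped.setdefault(OWNERS.get(top, "UNOWNED"), []).append(path)
    return grouped

change = [
    "orders/api.py",
    "orders/service.py",
    "billing/internal/ledger.py",
    "scripts/migrate.py",
]
summary = owners_for_change(change)
for team, paths in summary.items():
    print(team, paths)
print("touches more than one owner:", len(summary) > 1)  # True
```

A change that lands in one owner’s bucket is easy to route. One that spreads across several, or hits `UNOWNED`, is a signal that boundaries or ownership need attention.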

The Real Question

The teams I’ve seen succeed don’t just adopt AI for code generation. They redesign the system around it. Structure that supports local reasoning. Tests that define correctness. Clear product context. Explicit ownership.

The teams still waiting for the payoff changed only one part of the process. The rest stayed the same.

The real question isn’t how fast AI can write code. It’s how fast your system can trust the changes.